Set up chaingraph

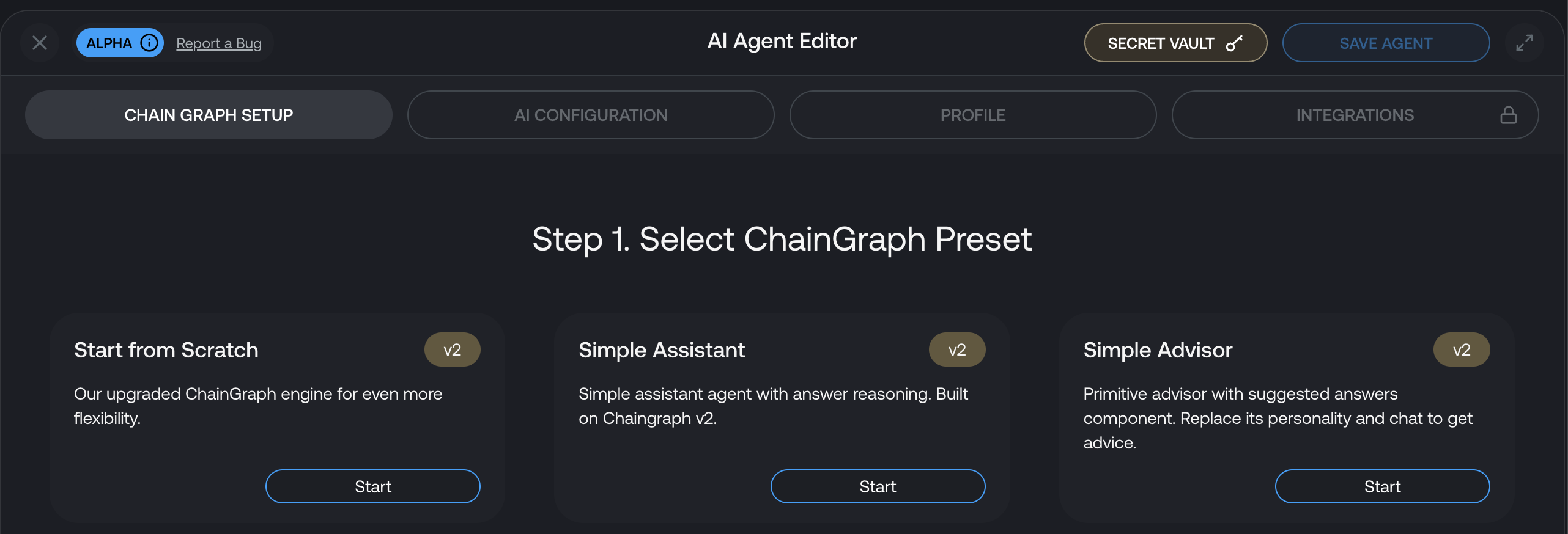

Choose a pre-built template

The fastest way to start is to choose one of the pre-built templates. The template collection is constantly updated with new flows.

Choosing a template creates your personal copy of the flow, which you can edit or use as is.

You can also use templates as a source of reusable parts: copy individual functions or fragments of a flow into your own flows, as described in import-export-flows.md.

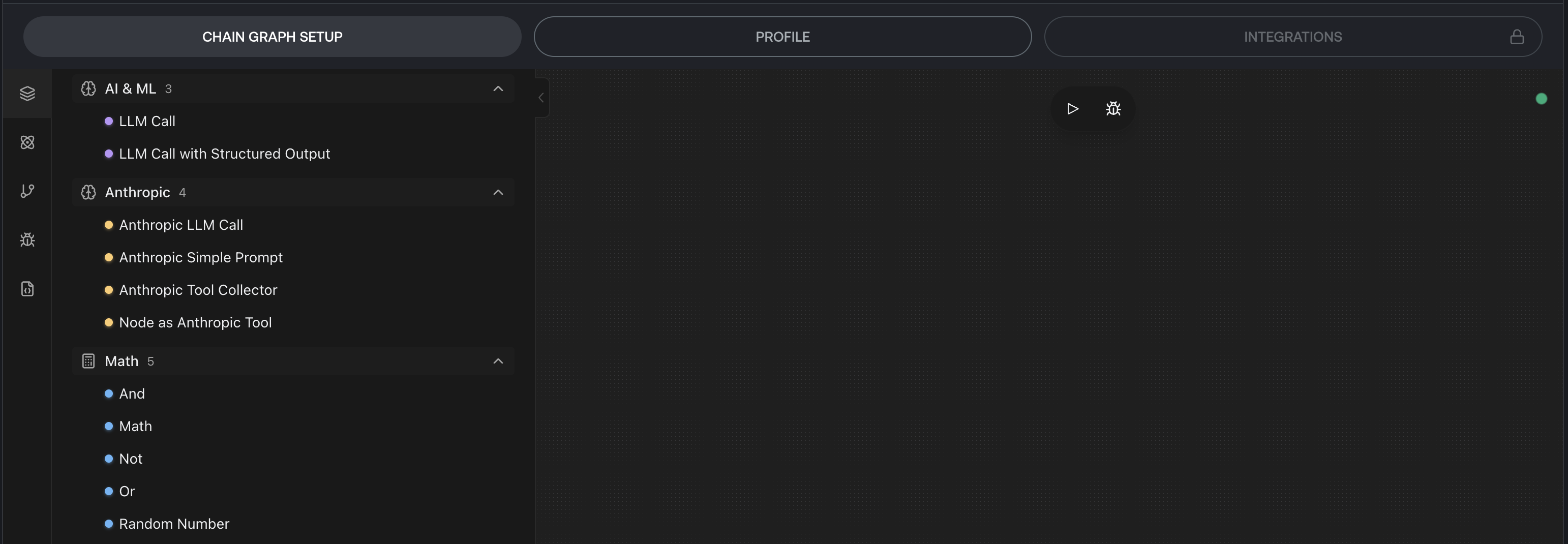

Build it yourself

Select the Start from Scratch option to open an empty workspace.

Now, drag the nodes from the left sidebar to build your first flow.

The simplest flow receives a message, processes it with an LLM, and sends a response. Let's build it together.

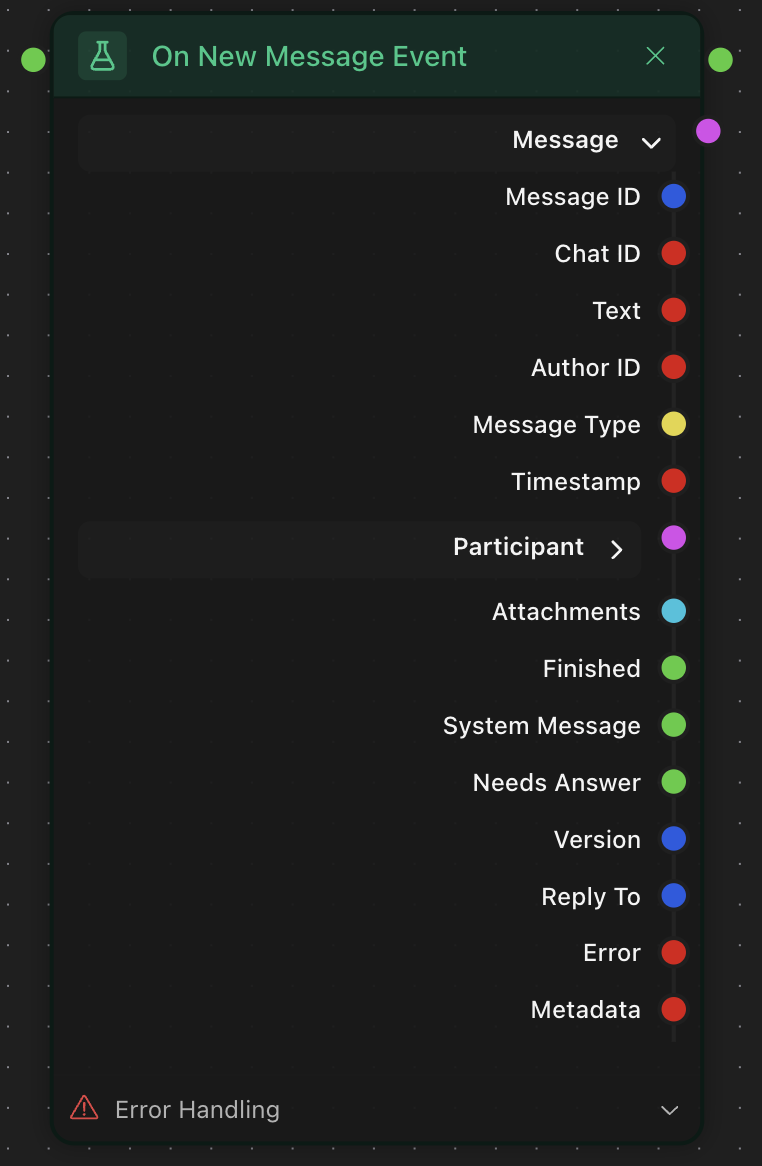

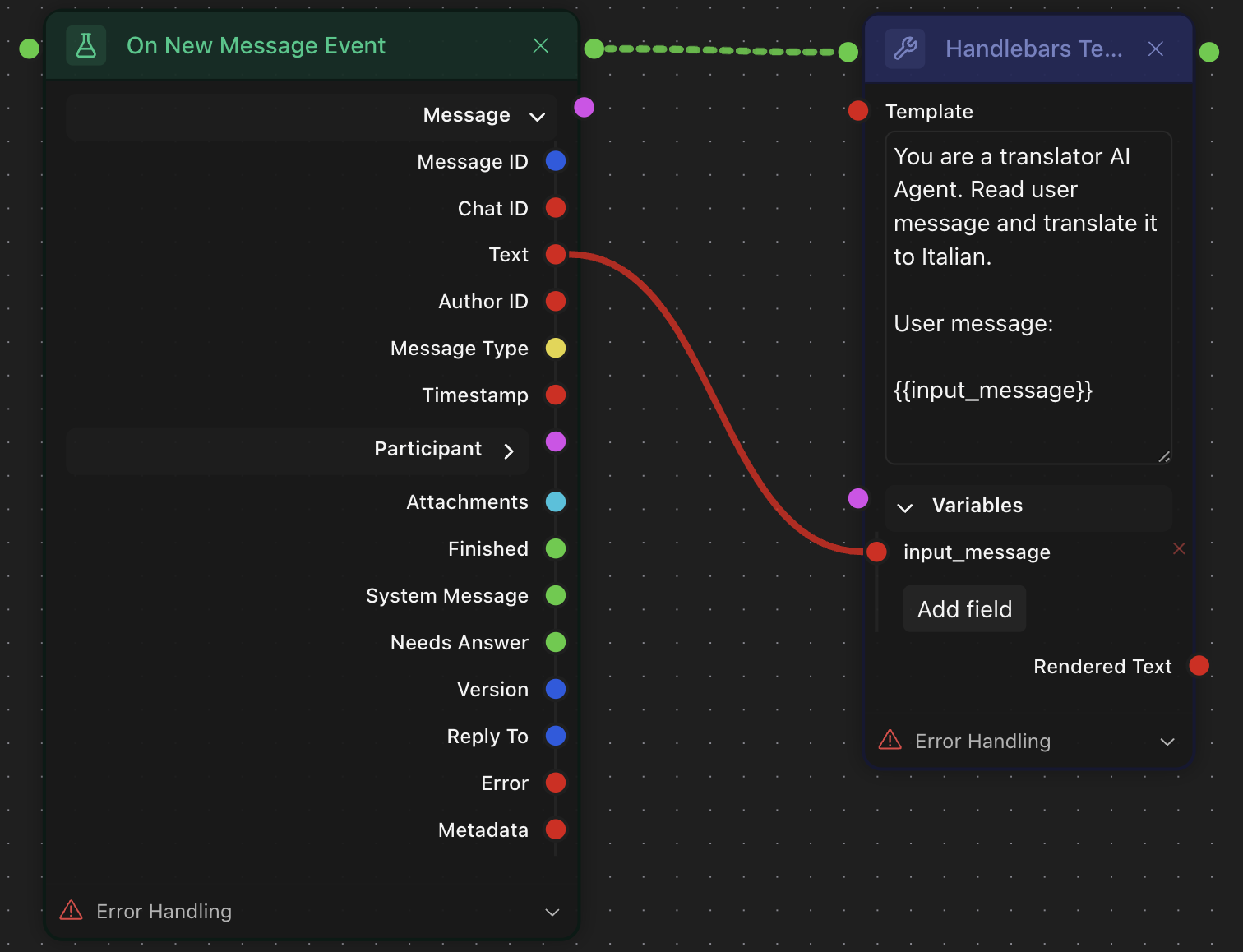

We get the message from the On New Message Event node. It provides an object with all chat-related details and acts as the trigger event for the other nodes. Read events.md and flow-control.md to better understand how flows and events work in chaingraph.
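The trigger idea can be pictured with a small TypeScript sketch. Everything in it is hypothetical: the `NewMessageEvent` fields (`chatId`, `author`, `text`) and the `EventBus` class are illustrative names, not chaingraph's API. It only shows how one incoming event fans out to every node subscribed to it.

```typescript
// Hypothetical event payload; the real On New Message Event node may expose different fields.
interface NewMessageEvent {
  chatId: string;
  author: string;
  text: string;
}

type Handler = (event: NewMessageEvent) => void;

// Hypothetical minimal event bus: nodes subscribe, and emitting an event runs each of them in order.
class EventBus {
  private handlers: Handler[] = [];

  subscribe(handler: Handler): void {
    this.handlers.push(handler);
  }

  emit(event: NewMessageEvent): void {
    for (const handler of this.handlers) {
      handler(event);
    }
  }
}

const bus = new EventBus();
const received: string[] = [];

// A downstream "node" that only reads the message text, like a node wired to the Text port.
bus.subscribe((event) => received.push(event.text));

bus.emit({ chatId: "chat-1", author: "alice", text: "Hello!" });
```

Nothing runs until `emit` is called, which mirrors how the downstream nodes wait for the event that triggers them.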

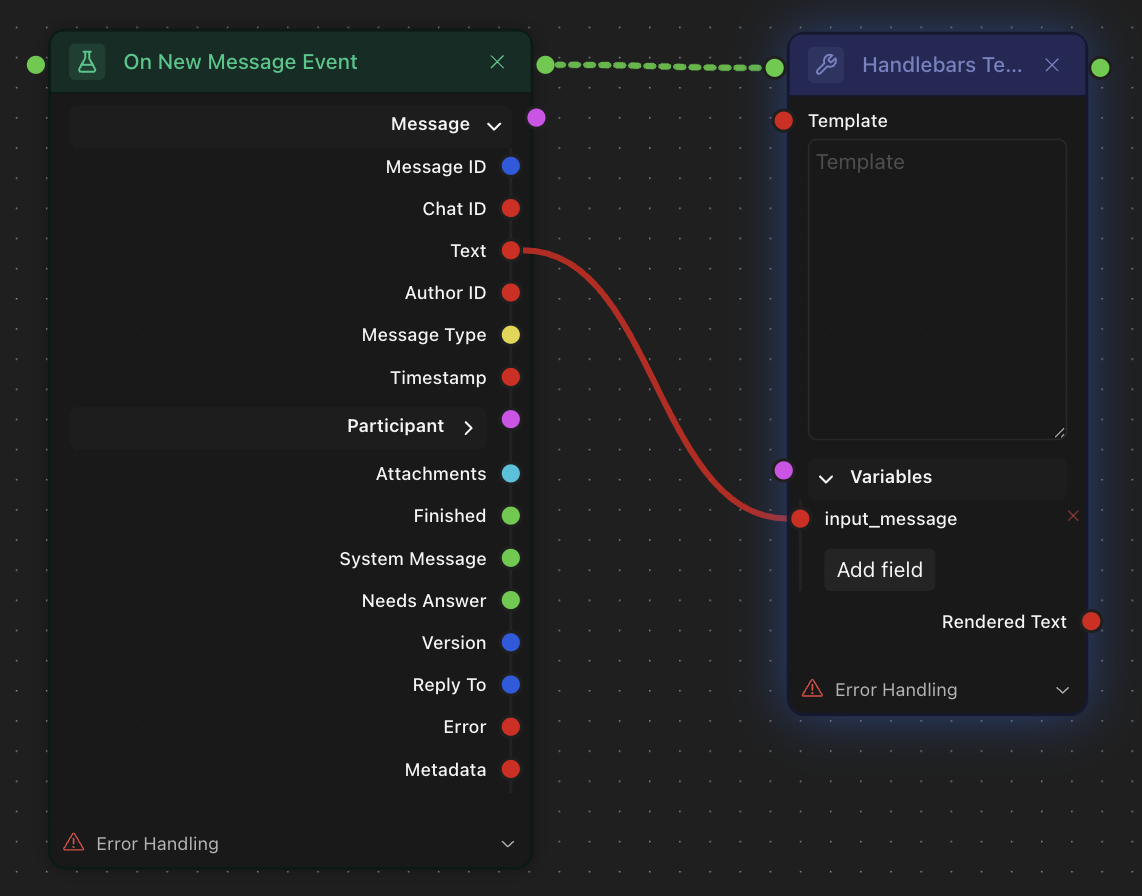

The text of the incoming message is available on the Text port. Connect it to a Handlebars Template node as the input_message variable. This way we can combine the message with a prompt and pass both to the LLM node.

To use a string variable in the template, wrap its name in double curly braces, like this: `{{input_message}}`. Learn more about data-types.md and how to use templates.md in your flows.
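To see what the double-brace substitution does, here is a minimal TypeScript sketch. `renderTemplate` is a hypothetical helper, not the Handlebars library and not the Handlebars Template node's code. It only replaces `{{name}}` placeholders with string values, which is enough to show how the message and the prompt end up in one string.

```typescript
// Hypothetical helper, NOT the Handlebars library: it only replaces {{name}}
// placeholders with string values (no helpers, blocks, or HTML escaping).
function renderTemplate(template: string, vars: Record<string, string>): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (placeholder: string, name: string) =>
    // Only use the caller's own keys; unknown placeholders are left as they are.
    Object.prototype.hasOwnProperty.call(vars, name) ? vars[name] : placeholder,
  );
}

// The prompt text is part of the template; the user's message fills {{input_message}}.
const prompt = renderTemplate(
  "Reply to the user in one sentence.\nUser message: {{input_message}}",
  { input_message: "Good morning!" },
);
console.log(prompt);
```

The real Handlebars library also HTML-escapes values placed in double braces (triple braces skip escaping), which this sketch does not do.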

Let's prompt the agent to act as a basic translator into Italian.

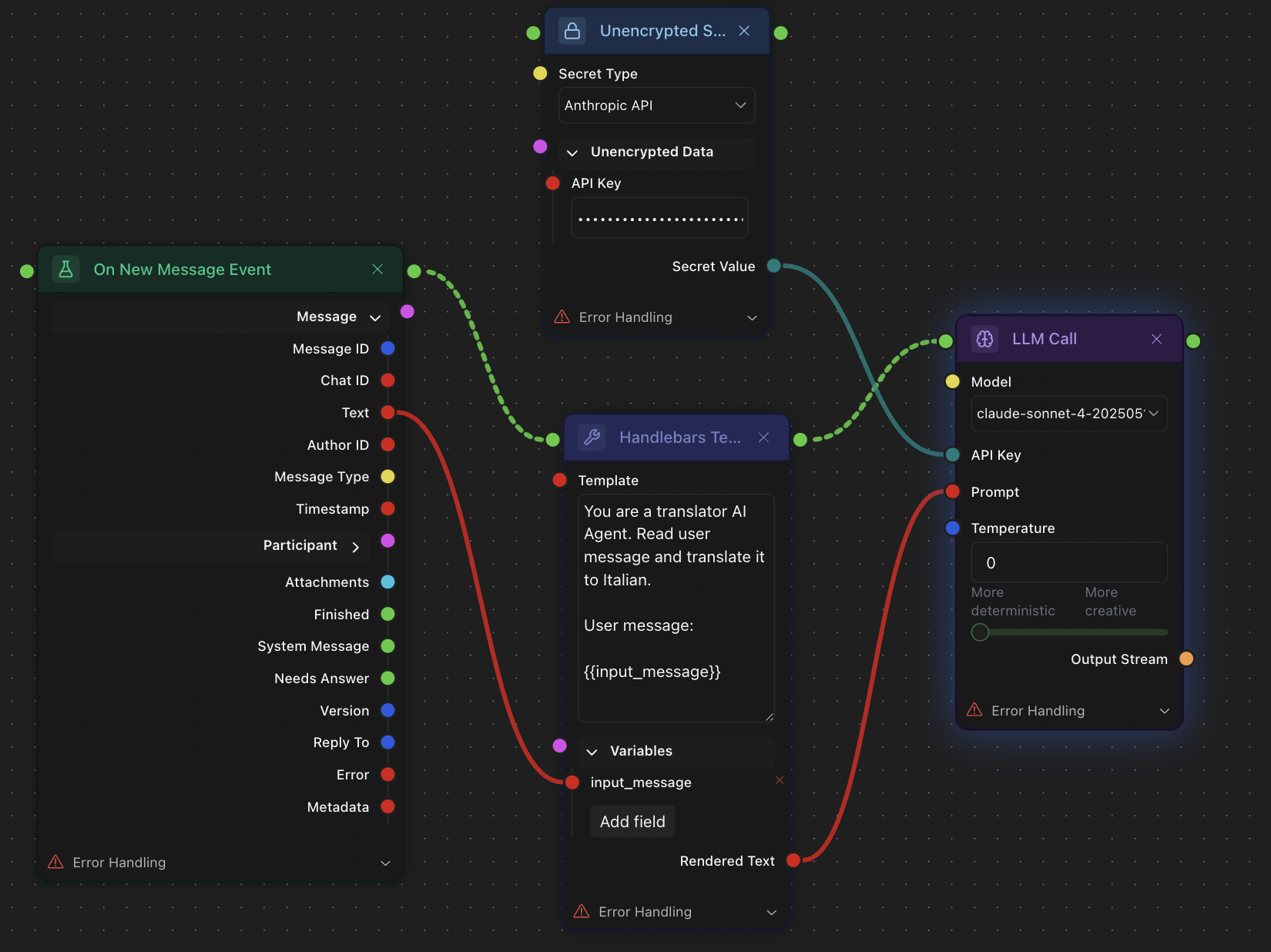

For the agent's "brain", we follow the calling-llms.md guide and use the LLM Call node with the Claude Sonnet 4 model. I connected the output of the Handlebars Template (the instructions combined with the user's message) to the Prompt port of the LLM Call node and added an API key from the Anthropic console (see secret-vault.md).
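A call to Claude ultimately comes down to an HTTP request to Anthropic's Messages API. The TypeScript sketch below only builds such a request, for orientation: it is an assumption about what an equivalent raw call looks like, not how the LLM Call node is implemented. The model ID `claude-sonnet-4-20250514` and the key `sk-ant-example` are assumptions and placeholders; in chaingraph the real key belongs in the secret vault, not in code.

```typescript
// Request shape defined locally so the sketch has no dependencies.
interface AnthropicRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

const ANTHROPIC_URL = "https://api.anthropic.com/v1/messages";
// Assumed model ID for Claude Sonnet 4; verify it against Anthropic's model list.
const CLAUDE_SONNET_4 = "claude-sonnet-4-20250514";

function buildClaudeRequest(prompt: string, apiKey: string, maxTokens = 1024): AnthropicRequest {
  if (apiKey.length === 0) {
    throw new Error("Missing Anthropic API key");
  }
  return {
    url: ANTHROPIC_URL,
    method: "POST",
    headers: {
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
      "content-type": "application/json",
    },
    body: JSON.stringify({
      model: CLAUDE_SONNET_4,
      max_tokens: maxTokens,
      stream: true, // ask for server-sent events instead of one final JSON response
      messages: [{ role: "user", content: prompt }],
    }),
  };
}

// Placeholder key for illustration only.
const request = buildClaudeRequest("Translate into Italian: Good morning!", "sk-ant-example");
// Sending it would be: fetch(request.url, { method: request.method, headers: request.headers, body: request.body })
```

With `stream: true` the API returns the reply as a sequence of events rather than one final string, which is why a streamed LLM output has to be consumed chunk by chunk.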

The output of the LLM Call is of stream type, so I look for a node that streams the LLM response to the chat and connect it.
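The difference between a stream and a plain string output can be sketched in TypeScript. `fakeLlmStream` and `ChatMessage` are hypothetical stand-ins, not chaingraph nodes: the generator yields the reply in fixed-size chunks, and the consumer updates the chat message after every chunk, so the user sees the answer grow instead of waiting for the whole text.

```typescript
// Hypothetical stand-in for a stream-type LLM output: the reply arrives in chunks.
function* fakeLlmStream(answer: string, chunkSize: number): Generator<string> {
  for (let i = 0; i < answer.length; i += chunkSize) {
    yield answer.slice(i, i + chunkSize);
  }
}

// Hypothetical chat message that records its visible text after every update.
class ChatMessage {
  private parts: string[] = [];
  readonly updates: string[] = [];

  append(chunk: string): void {
    this.parts.push(chunk);
    this.updates.push(this.parts.join(""));
  }

  get text(): string {
    return this.parts.join("");
  }
}

// Consume the stream chunk by chunk, updating the chat as each piece arrives.
function streamToChat(stream: Iterable<string>, message: ChatMessage): void {
  for (const chunk of stream) {
    message.append(chunk);
  }
}

const message = new ChatMessage();
streamToChat(fakeLlmStream("Buongiorno!", 4), message);
```

A non-streaming output would update the chat only once, with the complete reply.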

The flow is complete: the agent receives a message, passes it to the LLM together with the prompt, and streams the LLM response to the chat. Now it's time to test and deploy it (test-and-deploy.md) or to set the agent profile (set-agent-profile.md).