Calling LLMs

Chaingraph is LLM-agnostic: you can mix OpenAI models, Claude, on-premise models, or models from any other provider within a single workflow to balance speed and cost.

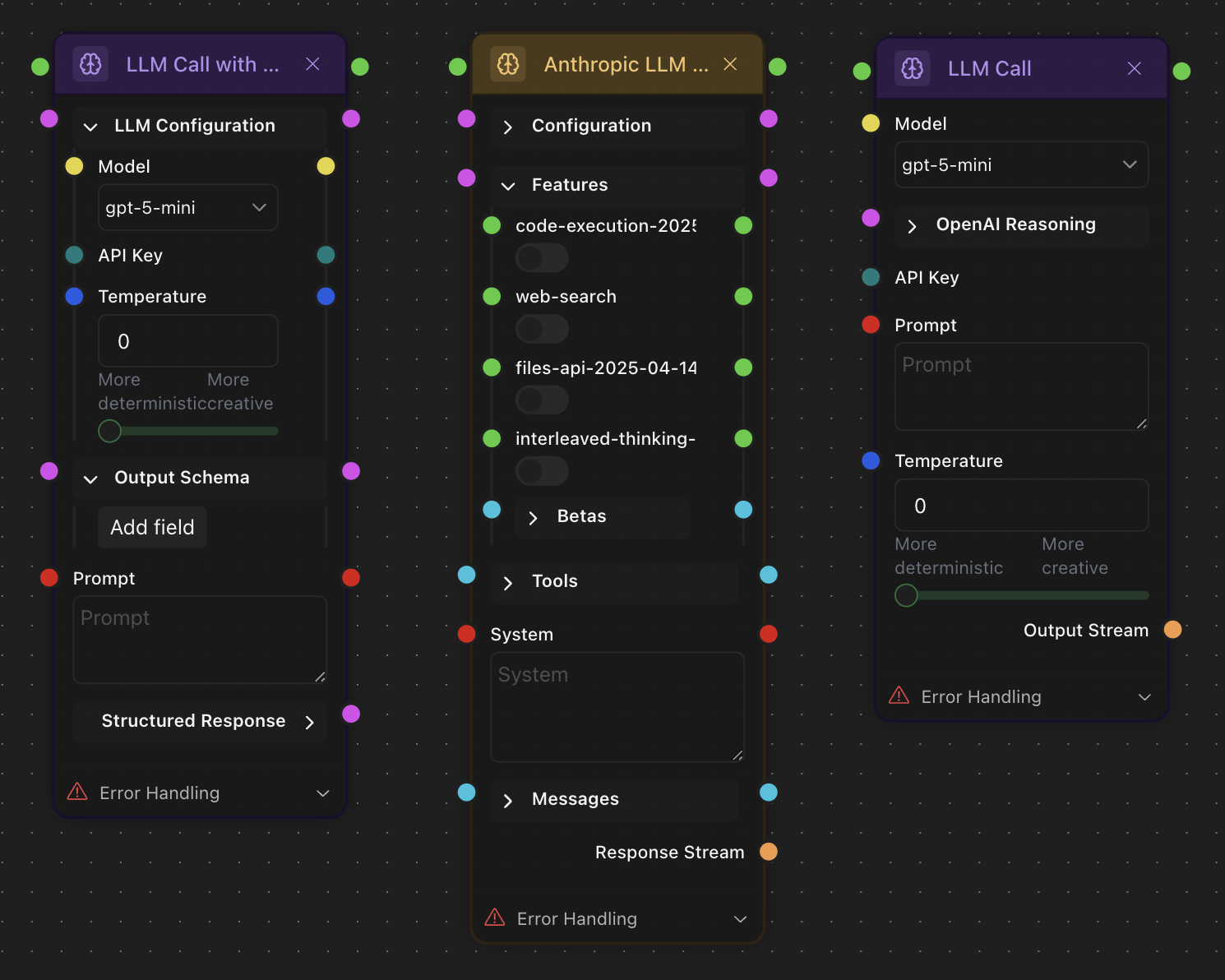

LLMs are integrated into Chaingraph through nodes. Currently, OpenAI, Anthropic, Moonshot, and DeepSeek models are supported. Different tasks may call for different nodes.

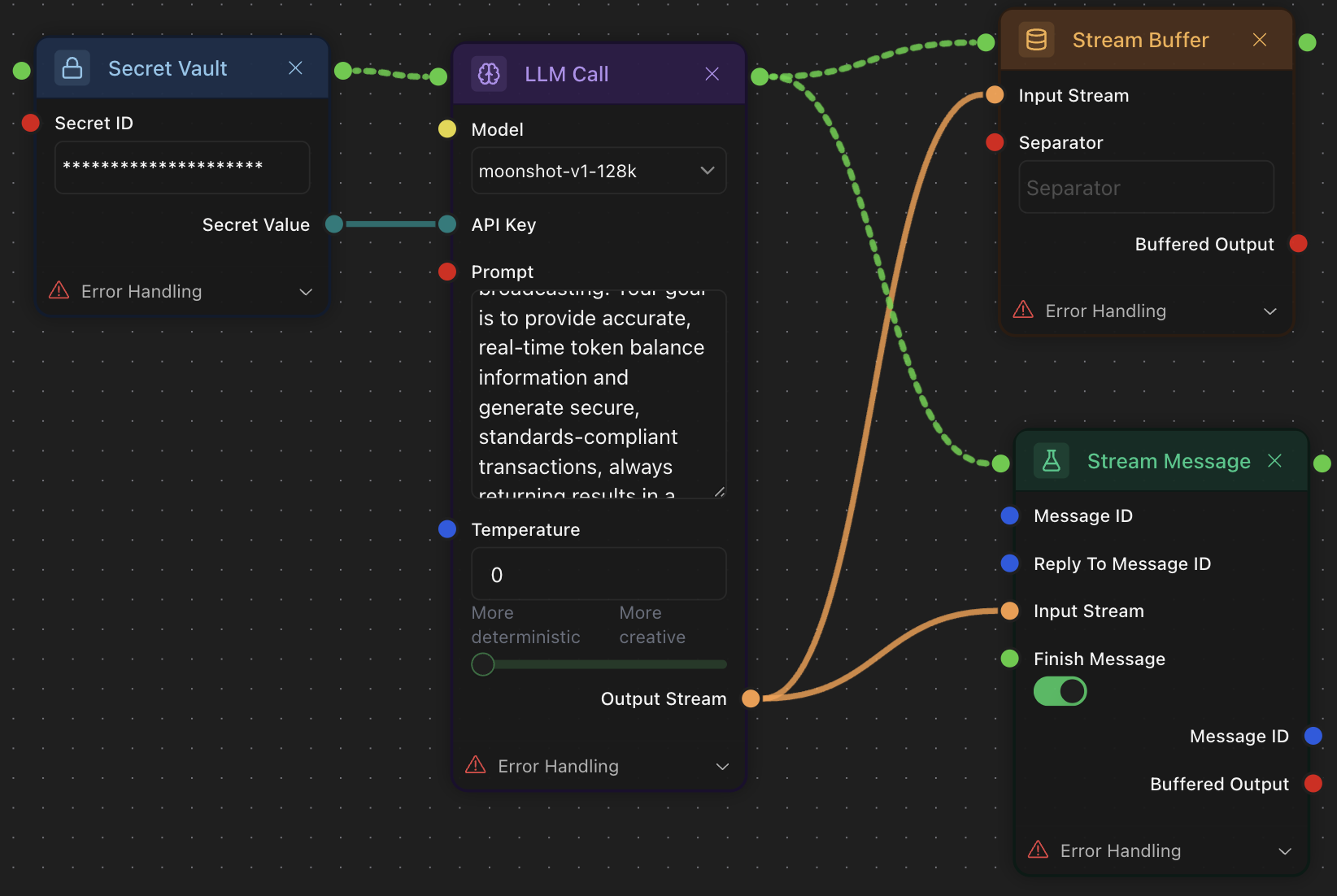

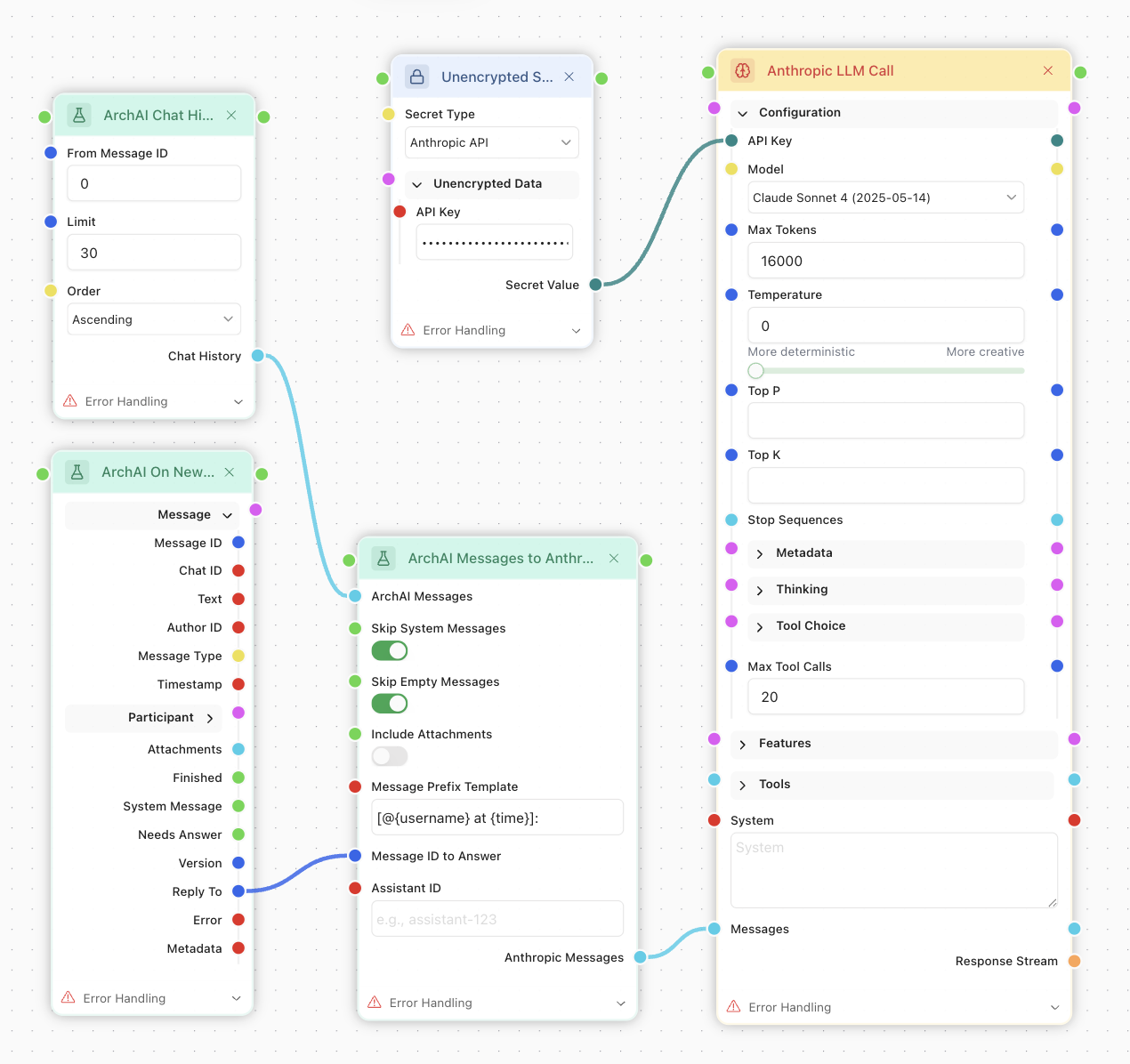

Whichever node you choose, every LLM requires an API key. To obtain one, consult the LLM provider's documentation. You can add your key using either the [Secret Vault](secret-vault.md) or the Unencrypted Secret node.

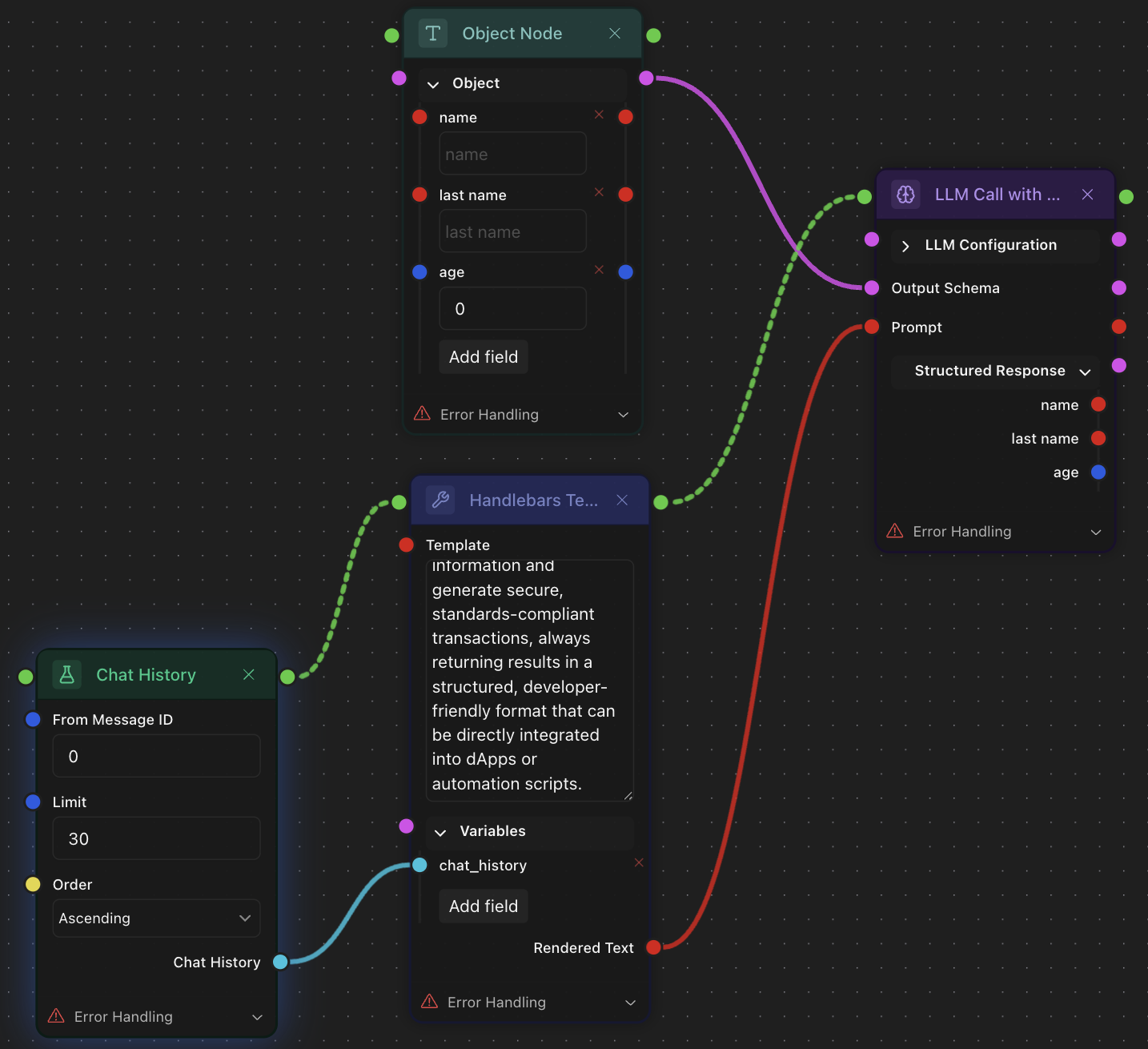

Every LLM call requires a System Prompt. You can write it directly in the node's Prompt text area, or use [templates](templates.md) to inject additional context and variables into the prompt before it is sent to the LLM.
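As a rough illustration of what templating a prompt means, the sketch below fills variables into a System Prompt before it would be sent. It uses Python's `string.Template` with `$variable` placeholders purely as an assumption; Chaingraph's own template syntax is covered on the templates page and may differ. The variable names `company` and `tone` are hypothetical.

```python
from string import Template

# Hypothetical system prompt; $company and $tone are illustrative placeholders,
# not Chaingraph template syntax.
SYSTEM_PROMPT = Template(
    "You are a support assistant for $company. "
    "Answer in a $tone tone."
)


def render_prompt(template: Template, variables: dict[str, str]) -> str:
    """Fill every placeholder; raises KeyError if a variable is missing."""
    return template.substitute(variables)


prompt = render_prompt(SYSTEM_PROMPT, {"company": "Acme", "tone": "friendly"})
print(prompt)
```

Using `substitute` rather than `safe_substitute` makes a missing variable fail loudly instead of leaking a raw `$placeholder` into the prompt.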

LLM Call

The most basic node, covering general-purpose scenarios. It supports 20+ models (with more coming) and requires only an API key and a prompt. Its output is a stream: use the Stream Buffer node to convert it into a string, or stream the result straight to the chat.
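Conceptually, buffering a stream means collecting incremental text chunks until the stream ends and joining them into a single string, while streaming to the chat forwards each chunk as soon as it arrives. The sketch below models the LLM output as a Python generator of chunks; `fake_llm_stream`, `buffer_stream`, and `stream_to_chat` are hypothetical stand-ins, not Chaingraph or provider APIs.

```python
from typing import Iterable, Iterator


def fake_llm_stream(text: str, chunk_size: int = 4) -> Iterator[str]:
    """Hypothetical stand-in for a streaming LLM response: yields text in small chunks."""
    for start in range(0, len(text), chunk_size):
        yield text[start:start + chunk_size]


def buffer_stream(chunks: Iterable[str]) -> str:
    """Consume the whole stream and return the concatenated text."""
    return "".join(chunks)


def stream_to_chat(chunks: Iterable[str]) -> None:
    """Forward each chunk immediately, like streaming straight to the chat."""
    for chunk in chunks:
        print(chunk, end="", flush=True)
    print()


answer = buffer_stream(fake_llm_stream("Hello from the model!"))
```

In this sketch a generator can be consumed only once, so each stream is either buffered or forwarded, not both.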

LLM Call with Structured Output

Like the basic LLM Call, but this node structures its output according to a schema. The schema can be configured inside the node, or supplied by another node connected to the Output Schema port; in that case, the Structured Response inherits the schema of the connected node.

Read more about [how schemas work for LLM Call with Structured Output](#llm-call-with-structured-output).
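To make the idea of an output schema concrete, the sketch below describes a Structured Response as a JSON Schema-style object and checks that a model's JSON reply contains the required fields with the expected types. The schema shape, the field names `sentiment` and `confidence`, and the `check_response` helper are assumptions for illustration; the node's schema editor may represent schemas differently.

```python
import json

# Hypothetical output schema in a JSON Schema-like shape.
OUTPUT_SCHEMA = {
    "type": "object",
    "properties": {
        "sentiment": {"type": "string"},
        "confidence": {"type": "number"},
    },
    "required": ["sentiment", "confidence"],
}

# Map JSON Schema type names to the Python types produced by json.loads.
_TYPES = {
    "string": str,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_response(raw: str, schema: dict) -> dict:
    """Parse a JSON reply and verify required fields and their top-level types."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("response must be a JSON object")
    for field in schema.get("required", []):
        if field not in data:
            raise ValueError(f"missing required field: {field}")
    for field, spec in schema["properties"].items():
        if field not in data:
            continue
        value = data[field]
        # bool is a subclass of int in Python, so reject it explicitly for "number".
        if spec["type"] == "number" and isinstance(value, bool):
            raise ValueError(f"{field} should be a number")
        if not isinstance(value, _TYPES[spec["type"]]):
            raise ValueError(f"{field} should be of type {spec['type']}")
    return data


result = check_response('{"sentiment": "positive", "confidence": 0.92}', OUTPUT_SCHEMA)
```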

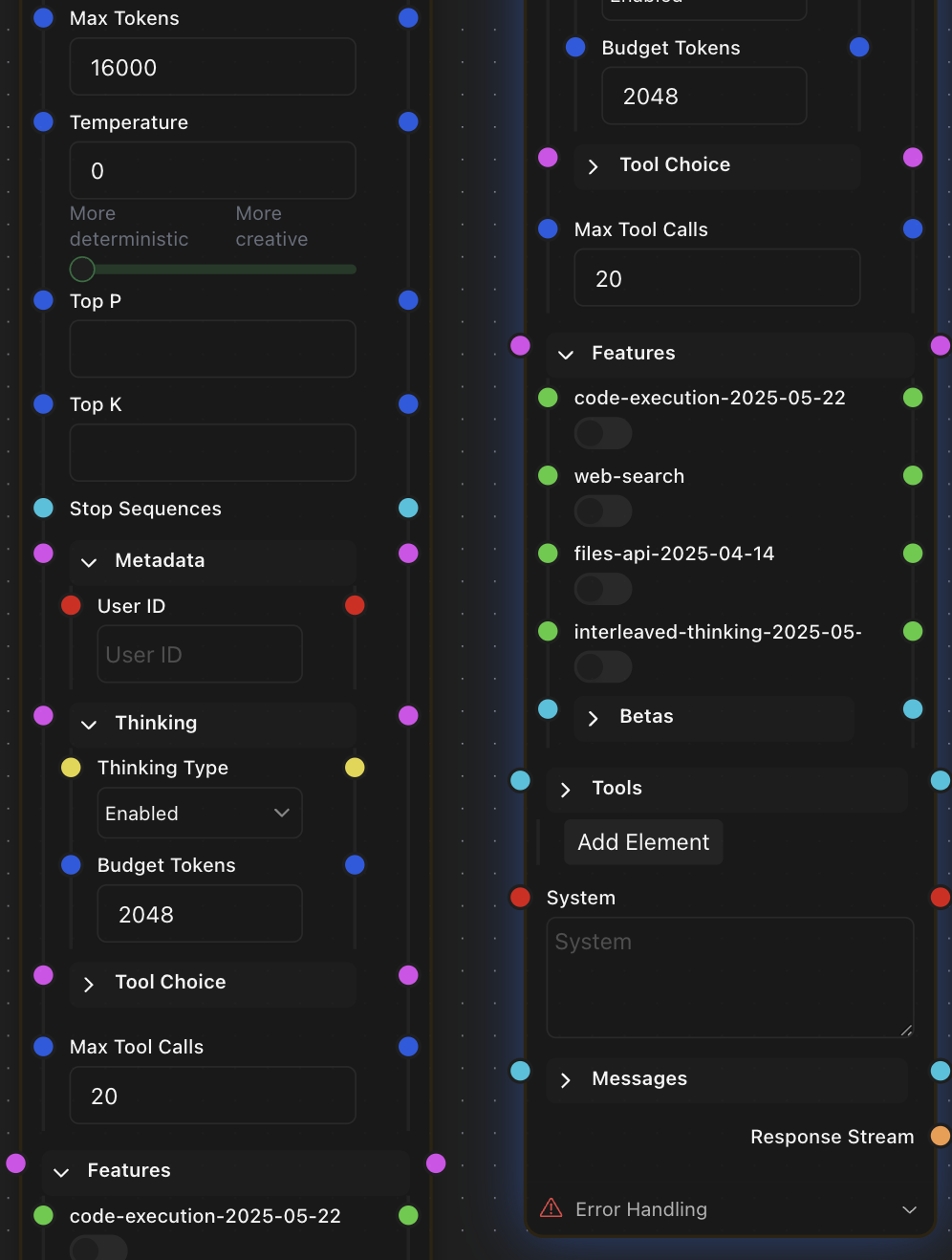

Anthropic LLM Call

Unlike the other nodes, this one supports only Anthropic models, such as Claude Sonnet 4, Claude Opus 4, and Claude 3.7 Sonnet. The main difference is the larger set of configurable properties and built-in features such as [Tools](tools-anthropic-llms-only.md), Web Search, Thinking, and Code Execution.

Providing Chat History

Anthropic LLM Call accepts a Messages Array as input. It's best to supply the chat history through this dedicated field so that Anthropic's optimisations for it are applied.
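For reference, the Anthropic Messages API takes the system prompt and the conversation as separate fields: `system` holds the instructions, and `messages` is an array of objects with a `role` (`user` or `assistant`) and `content`. The sketch below builds such a request body as a plain dictionary. The `build_request` helper and the model identifier are placeholders, and how the node assembles its request internally is not documented here.

```python
def build_request(system_prompt: str, history: list[tuple[str, str]], model: str) -> dict:
    """Build an Anthropic Messages API-style request body (sketch).

    `history` is a list of (role, text) pairs with role "user" or "assistant".
    """
    for role, _ in history:
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
    return {
        "model": model,
        "max_tokens": 1024,
        "system": system_prompt,  # instructions go here, not into the messages
        "messages": [{"role": role, "content": text} for role, text in history],
    }


body = build_request(
    "You are a helpful assistant.",
    [
        ("user", "Hi!"),
        ("assistant", "Hello! How can I help?"),
        ("user", "Summarise our chat."),
    ],
    model="your-model-id",  # placeholder, not a real model identifier
)
```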

The ArchAI Messages to Anthropic Converter node simplifies passing the chat history and the input message to Anthropic LLMs. It outputs the chat history together with a pointer to the message the LLM has to answer, so you don't need to include the text of the input message in the System Prompt. Its other fields are:

- Skip System Messages: omits service messages such as "The agent was added to the chat"

- Skip Empty Messages

- Include Attachments (not yet fully supported by the Chat Engine)

- Message Prefix Template: distinguishes chat participants, because Anthropic does not support chats with multiple agents and users. The prefix is prepended to the text of every message in the Anthropic Messages Array.

- Assistant ID
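The converter's options can be illustrated with a short sketch. It assumes a hypothetical chat message shape (`author_id`, `author_name`, `text`, `is_system`), treats messages whose author matches the Assistant ID as `assistant` turns and everyone else as `user` (an assumption about that field), and uses Python's `str.format` with a `{name}` placeholder as a stand-in for the Message Prefix Template. None of these names come from Chaingraph or ArchAI; the real node may behave differently, and Include Attachments is left out.

```python
from dataclasses import dataclass


@dataclass
class ChatMessage:
    """Hypothetical chat message shape, for illustration only."""
    author_id: str
    author_name: str
    text: str
    is_system: bool = False


def to_anthropic_messages(
    chat: list[ChatMessage],
    assistant_id: str,
    prefix_template: str = "[{name}]: ",
    skip_system: bool = True,
    skip_empty: bool = True,
) -> list[dict]:
    """Convert chat history into an Anthropic-style messages array (sketch)."""
    result = []
    for msg in chat:
        if skip_system and msg.is_system:
            continue  # e.g. "The agent was added to the chat"
        if skip_empty and not msg.text.strip():
            continue
        # Assumption: the Assistant ID marks which author maps to the "assistant" role.
        role = "assistant" if msg.author_id == assistant_id else "user"
        # Prefix every message so the model can tell participants apart,
        # since the API itself only distinguishes "user" and "assistant".
        text = prefix_template.format(name=msg.author_name) + msg.text
        result.append({"role": role, "content": text})
    return result


chat = [
    ChatMessage("sys", "System", "The agent was added to the chat", is_system=True),
    ChatMessage("u1", "Alice", "Can you summarise the report?"),
    ChatMessage("u2", "Bob", ""),
    ChatMessage("bot", "Agent", "Sure, here is a summary."),
    ChatMessage("u2", "Bob", "Thanks!"),
]
messages = to_anthropic_messages(chat, assistant_id="bot")
```

Following the description above, the prefix is applied to every message, including the assistant's own turns.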